Nodal Points Digest #1: Deep Creativity, Cellular Minds and Fiction Machines

Thoughts on sharing as a practice, AI art's own medium, a new book from Greg Egan, and Borgesian machines

Borrowing Charles Broskoski’s usage of this word, nodal points are “any thing that has a hand in shaping how you see the world”. In other words, they are catalysts for future action. They inspire.

Nodal points are the search results and our interests are the search algorithm. Like other computations my cognition performs, I sense the usefulness of the search and yet have a hard time describing it. A list is always a good first try:

systems that display open-endedness or emergent behaviors: evolution, human and machinic creativity, artificial life;

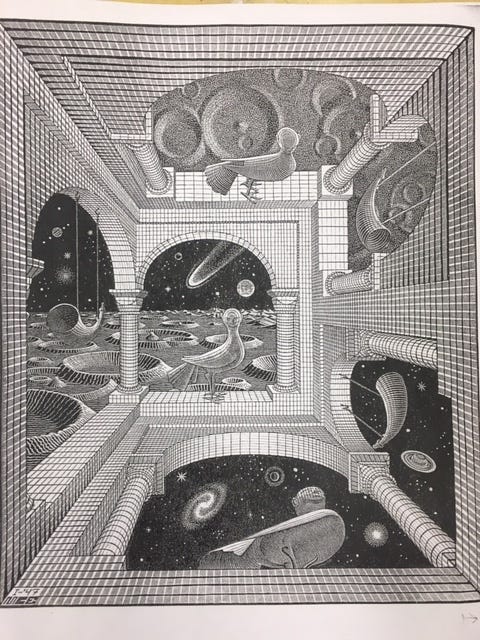

artworks that are complex systems themselves, a continuity between a variety of intelligent life forms, inanimate objects and technologies;

tools to think about and play around with complex systems; to think the unthinkable.

I guess a running theme is “systems”. Especially those that evolve, learn, adapt; those that surprise me; those that seem to almost have their own mind.

I strive to learn more about this type of system (as a researcher), and to create such systems (as an artist and engineer). I want to gaze at them and be fascinated. One can be fascinated by one’s creation, right?

“Nodal Points Digest” is a type of post that I started writing mostly for myself: it is a liminal space between the raw, unprocessed Twitter/Instagram/Are.na bookmarks and the final works they inspire. Writing about the nodal points forces me to understand better not just them, but also why I’m drawn to them. This newsletter, a space where I grow and share my nodal points and, hopefully, the fruits they bear, could potentially be one of your nodal points. Just as yours could be one of mine. A constellation of minds connecting.

I am warmed by this vision.

Deeper AI Art

I’ve been slowly catching up on this series: MIT Lectures: Deep Learning for Art, Aesthetics, and Creativity

In one lecture Joel Simon talks about how, as an aspiring sculptor, he picked up 3D modeling software to do digital sculpting and yet it didn’t feel quite right:

Conceptually something was wrong. And I realized these are digital attempts to mimic something analog. But they weren’t truly computational. It wasn’t sculpting in the medium of computer.

Then it struck me: prompted image generation, which represents most of the AI art we see, isn’t really creating in the medium of AI, either.

This series of talks is from people trying to explore AI’s own medium.

Take Joel Simon’s “Dimensions of Dialogue” for example. Two neural networks are challenged to communicate with each other by inventing new writing systems. It is a problem humans have already solved independently, at various times and in various ways. How would artificial neural networks approach this problem? What invariants across these alphabets might shed light on the essential traits that make languages work?

We can also venture into the space of qualia construed by our linguistic structure. In “the Latent Space of Colors”, I extracted all the colors listed on Wikipedia and obtained an embedding for each color name through one of the OpenAI models, making a semantic color space purely from human language. It seems to support the claim that large language models encode not just relational information, but also relations that are grounded in our perceptual space.
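For the curious, here is a minimal sketch of the idea of a semantic color space: each color name becomes a vector, and "closeness" between colors is measured by cosine similarity. This is not the code behind the project. The vectors below are hand-made placeholders standing in for real model embeddings, and the names `TOY_EMBEDDINGS`, `cosine_similarity` and `nearest_colors` are hypothetical.

```python
import math

# Hand-made placeholder vectors (an assumption for illustration only).
# Real embeddings would come from an embedding model and have far more dimensions.
TOY_EMBEDDINGS = {
    "crimson": [0.90, 0.10, 0.10, 0.30],
    "scarlet": [0.85, 0.15, 0.05, 0.35],
    "navy": [0.10, 0.10, 0.80, 0.20],
    "azure": [0.15, 0.30, 0.90, 0.10],
    "olive": [0.40, 0.60, 0.10, 0.20],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_colors(name, embeddings, k=2):
    """Return the k color names most similar to `name`, most similar first."""
    query = embeddings[name]
    scored = [
        (other, cosine_similarity(query, vector))
        for other, vector in embeddings.items()
        if other != name
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    for color in TOY_EMBEDDINGS:
        neighbors = [other for other, _ in nearest_colors(color, TOY_EMBEDDINGS)]
        print(color, "->", neighbors)
```

With real embeddings, the interesting question is whether neighbors in this purely linguistic space also turn out to be neighbors in perceptual color space.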

AI is the most interesting, complicated tool we’ve ever invented, the closest thing we’ve ever made to a human mind. And we are at a time when we are at liberty to peek deeper into the layers of neurons in the architecture, to spin up instances of minds and do fantastical philosophical experiments that previously were only “thinkable”. That’s wild.

Different Forms of Cognition

Greg Egan’s new book, “Morphotrophic”, imagines a world where the relationship between us and our cells is much more keenly felt: a girl loses her limbs after some of her “cytes” relinquish their allegiance by melting away from her body; regular body “swapping” practitioners shuffle their cytes, hoping this will keep them strong and ever flourishing; cytes (not neurons) can contain a whole person’s consciousness.

In the acknowledgements, Egan cites Michael Levin as one major inspiration: “it should be possible to induce the formation of metazoan-like bodies in an otherwise unicellular organism… Similarly, it may be possible to induce dissolution of an entire metazoan body by appropriate changes of bioelectric dynamics.” [1]

Indeed, “Morphotrophic” reads like a speculation on Levin’s research, where cellular intelligence is acknowledged, albeit still yet to be fully understood, and harnessed to create outcomes that humans have long dreamed of: regeneration, longevity, even shapeshifting. Levin’s works offer ample real-life examples from other species: flatworms that can regenerate and grow into an anatomically complete worm from any part; caterpillars that dismantle their brains through metamorphosis but still retain prior behavioral memories as butterflies. Stories like these stretch our conventional definition of intelligence: brainless, neuronless, just cells and their networks displaying cognitive traits like goal-directed action and memory.

In another paper, “Biology, Buddhism, and AI: Care as the Driver of Intelligence”, Levin et al. propose a framework of intelligence that liberates agents from a biology-based evolutionary lineage. My belief is that being able to recognize intelligence in its various forms will be one of the biggest advances we make. To simulate a system where people can experiment with truly extending their definition of intelligence, I made the installation “The ${self} Is A Computational Boundary”: virtual creatures, driven by other humans, AI and simulated collective intelligence, surround the viewer. The viewer observes, guesses and responds to the ecosphere with their body movement.

LLMs as Borgesian Fiction Machines

In his paper “Borges and AI”, Bottou offers an alternative to treating LLMs as sentient and intellectual threats: they are fiction machines concerned with neither intention nor truth, only narrative necessity:

The ability to recognize the demands of a narrative is a flavour of knowledge distinct from the truth. Although the machine must know what makes sense in the world of the developing story, what is true in the world of the story need not be true in our world. Is Juliet a teenage heroine or your cat-loving neighbour? Does Sherlock Holmes live on Baker Street? [8] As new words are printed on the tape, the story takes new turns, borrowing facts from the training data (not always true) and filling the gaps with plausible inventions (not always false). What the language model specialists sometimes call hallucinations are just confabulations.

Ok, so what do we do with fiction machines? What’s the use of stories anyway?

As children we start our learning through fairy tales; in school we learn lessons in every subject, including science, through stories. We receive our news through stories. We tell stories with our family and friends. Patrick Winston, a student of Minsky’s, even goes as far as arguing that storytelling is the one thing that separates human intelligence from that of other primates.

We even tell stories to ourselves: our inner dialogues are constant conversations within our mind, taking on the form of self-reflection, rehearsing future events or rehashing past ones.

LLMs weave living fictions that can be actualized.

A few thoughts for experiments:

prompts for cognitive reframing, a psychological technique for identifying and changing how someone views the world around them, which LLMs could augment. There is already evidence that LLMs can make better reappraisals than humans when reframing negative scenarios;

collective lore-making: a lore is constantly being written and rewritten by LLMs while members of the lore community decide which part to take;

or, just for the sheer fun of it, fork out as many paths as possible from a single point and let your mind be constantly bombarded by the infinity of possible worlds.

[1] Michael Levin, “The Computational Boundary of a ‘Self’: Developmental Bioelectricity Drives Multicellularity and Scale-Free Cognition”, https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2019.02688/full

Hey Kat, I'm on a similar path and really enjoyed the links shared here. There's such a rich language in describing what AI can mean to us

this was a very difficult post for me to get through, because I found myself in awe after every paragraph (and off on a rabbit hole, taking copious notes). After the first paragraph I thought to myself, "wow, I love this, this feels like a genre of writing where people just collect the things they find very beautiful and meaningful and share them, and explain why it moves them, and I wish there was more of this"

and then I read that this is very much the intention and I feel like I'm in the right place!!!

> Writing about the nodal points forces me to understand better not just them, but also why I’m drawn to them. This newsletter, a space that I grow and share my nodal points and, hopefully, the fruits they bear

I have never heard of are.na before today, but I am shocked because it sounds like something I have been yearning for. "A garden of ideas, tumblr meets wikipedia", and also it's been going for 12 years?? (I also love love love that there is no "one founder", it's always "one of many founders of are.na". Makes it feel like a resilient network.)

Reminds me of an Alan Kay snippet I recently stumbled on, 27:00 to 29:00 (https://youtu.be/NdSD07U5uBs?t=1630), about how the internet is the closest thing we've built to a biological system:

> "the internet has grown by 10 orders of magnitude without ever breaking, the internet has no center, it's replaced all of its atoms and all of its bits at least twice since it started"

I love this part so much:

> Indeed, “Morphotrophic” reads like a speculation of Levin’s research where cellular intelligence is acknowledged, albeit still yet to be fully understood

I used to dismiss fiction as a purely leisurely activity. Recently I've found great utility in it, when I pair it side by side with a non-fiction reading material. For me this accidentally started with "Three Body Problem" & Fall Of Civilizations, where I realized the fun fiction story was actually capturing something very real, about history, and the end of the world, that I couldn't see reading just the non-fiction because of a lot of preconceived notions about ancient civilizations.

(another example is "Children of Time" + "I Am a Strange Loop")

I now see fiction as something like pseudocode? It is literally "false code" in that you can't run it. But in a lot of cases, it is "more true" than the actual implementation (if I can't separate the algorithm from the details of the implementation)

Morphotrophic + Levin's work sounds like an amazing pairing together.

> LLMs weave living fictions that can be actualized.

> A few thoughts for experiments:

I love this so much. I keep thinking a lot about a genre where I as the author don't actually write words that are ever read by the reader, instead I just build this world, and lots of rules and dynamics and interpersonal relationships. And the reader discovers this, by trying things in this world, by asking questions. Like, I think this is what good writers already do: (1) you envision a world (2) you envision characters embedded in that world (3) you extract some facts in that world, that are all consistent with the underlying universe, such that the reader has that sense of depth about what is happening in the rest of the world.

But I think steps (2) and (3) can be done communally and collaboratively. Like, there IS a narrative, I as the reader am not creating the narrative, so much as excavating it (which I think is what I now do anyway in my head, when I'm really engaged with a novel, and it lingers with me long after)

wrote a bit about this here: https://x.com/DefenderOfBasic/status/1770058783605014687