The Fluid Mind and the Ever More Magical Future of Interfaces

Part 1 of a two-part series on AI-enabled, embodied interfaces that augment open-ended thinking

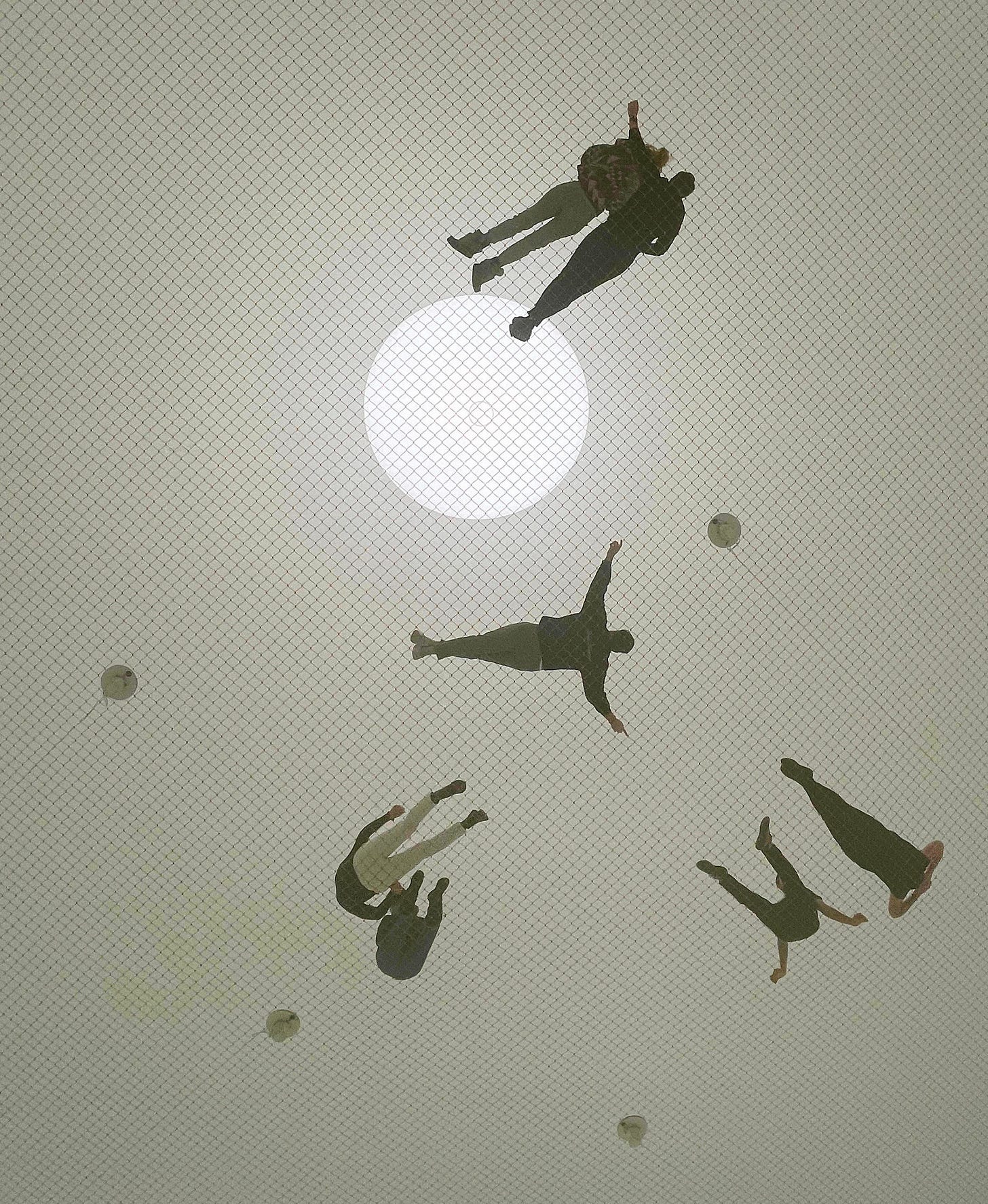

I thought I’d turned into a spider once.

I found myself crawling on a web, high above the ground, sensing vibrations from other wriggling web-bound creatures. But I was still human. A human attending an art exhibition. A human in a colossal sphere. A human at The Shed in New York City, where people (for some reason) suspend themselves in midair on a giant net, and experience gusts of artificial wind.

As I stretched out further, I could tell which vibrations came from my friend sitting near me and which came from someone farther away. I closed my eyes and reopened them to check whether my purely tactile judgement matched what I saw. As I got better at my little exercise, I felt the web had become my eyes, my ears, and, in some inexplicable way, an integral part of me.

Why should our bodies end at the skin, or include at best other beings encapsulated by skin? — Donna Haraway

Internalization of the World

Studies [1] show that the brain keeps a map of the space around us that lies within reach: certain neurons fire only when something enters this range. There are also neural maps of the space occupied by the body itself and of the space beyond our reach.

This body schema of ours is not fixed: over time, it can be stretched by experience. Experiments [2] show that when monkeys are trained to use rakes to reach food that would otherwise be out of reach, the neurons that map the hand and arm, as well as the space around them, change their firing patterns to include the rake and the space it can reach. In other words, the monkey’s neural representation of its reach is reshaped by its tool use.

I spent half an hour in the art installation’s taut web. The Japanese macaques were trained for three weeks to use rakes. Many of us spend hours every day using a mouse and a screen. Have we grown new senses of embodiment in the virtual reality that our ubiquitous computing interfaces already are?

In virtual reality, we can redraw our body schema to have infinite reach. We can all become spider people.

Externalization of the Mind

Our mind does more than internalize the external world; it also externalizes internal processes. We often use tools like notes, sketches, and even language itself to offload memory and reasoning.

Consider this thought experiment: Otto, who has a memory impairment, relies on a notebook, while Inga has normal memory. When each of them wants to go to MoMA, Otto looks up the address in his notebook while Inga recalls it from memory.

We are that strange species that constructs artifacts intended to counter the natural flow of forgetting. — William Gibson

Andy Clark and David Chalmers argue [3] that their cognition is equivalent, suggesting the mind uses whichever method is most efficient, internal or external, as long as the function is fulfilled. But in the real world, would you prefer Otto’s notebook or Inga’s recall for accessing information?

What’s missing from Clark and Chalmers’ rather functionalist view of the coupling between mind and tool is the phenomenology: how does using the tool actually feel? Is it convenient or cumbersome? Even when two methods achieve virtually the same result, the experience of accessing information can differ significantly, and that shapes our choices.

When I have an idea I want to revisit in the future, my first instinct is to jot it down on paper. This "instinct" is actually my brain's quick calculation of the action's cost versus its likely future benefit. In the past, I relied on my memory, which often failed me. Interestingly, the perceived inconvenience of typing on a keyboard or screen still outweighs that of writing on paper. When choosing between flipping through a notebook and recalling from memory, our mind is economical with cognitive costs yet indifferent to the medium.

So extending our mind with technology is possible: we just need to offer our brain a cognitively cheaper technology, so that it becomes the default route.

From Technology to Mindware

Even the default route might not be the best one possible. A notebook is a great technology but not a great piece of mindware. To work with the mind, mindware needs to run at the mind’s speed and feel like a natural extension of the body. The mind’s speed means close coordination and tight temporal coupling [4] between the external interaction and the internal process. To find certain information in a notebook, we take it out, flip through its pages, read, and search. The time between wanting that information and finding it is far longer than recalling it from memory, which typically happens within milliseconds.

Similarly, the mouse is a good technology, so good that it is still one of the dominant ways we interact with computers. Yet it is not mindware, because it doesn’t feel fully like part of the body: it requires a flat surface, limits movement to 2D, and creates a disconnect between nuanced hand motion and on-screen actions.

If these are not mindwares, then what is?

Speech, for internal dialogue. Sketching (as opposed to looking things up in a notebook, which involves physically flipping pages and searching visually). And we can certainly do more with gestures, in an embodied and intuitive way.

Imagine swiping through holographic menus, resizing 3D models with a pinch, or manipulating data visualizations with natural hand movements, all without an input device acting as a middleman between human and computer. All of this is possible, and already happening, through machine learning models that recognize our gestures, as well as AR/VR that weaves the space our gestures act on.
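As a rough illustration of the gesture side, here is a minimal sketch of how a pinch could drive a resize. It assumes a hand-tracking model (for example, one like MediaPipe Hands) already supplies 21 3D landmarks per hand in the MediaPipe layout, where 0 is the wrist, 4 the thumb tip, 8 the index fingertip, and 9 the base of the middle finger. The pinch threshold (0.25 times the wrist-to-middle-knuckle distance), the `fake_hand` test data, and every function name are hypothetical choices for illustration, not part of any library's API.

```python
import math

# Indices in the 21-point hand-landmark layout used by MediaPipe Hands.
WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9

def hand_scale(landmarks):
    """Wrist-to-middle-knuckle distance, used to normalize for hand size and depth."""
    return math.dist(landmarks[WRIST], landmarks[MIDDLE_MCP])

def is_pinching(landmarks, threshold=0.25):
    """Hypothetical rule: a pinch is thumb tip and index tip closer than
    `threshold` times the hand scale."""
    gap = math.dist(landmarks[THUMB_TIP], landmarks[INDEX_TIP])
    return gap < threshold * hand_scale(landmarks)

def pinch_point(landmarks):
    """Midpoint between thumb tip and index tip: where the 'grab' happens."""
    return tuple((a + b) / 2 for a, b in zip(landmarks[THUMB_TIP], landmarks[INDEX_TIP]))

def two_hand_scale(start_left, start_right, now_left, now_right):
    """Resize factor for a two-handed pinch-and-stretch: current distance
    between the pinch points divided by the distance when the pinch began."""
    start = math.dist(pinch_point(start_left), pinch_point(start_right))
    now = math.dist(pinch_point(now_left), pinch_point(now_right))
    return now / start if start > 0 else 1.0

def fake_hand(x, gap):
    """Synthetic landmarks for testing; only the four points used above matter."""
    pts = [(x, 0.5, 0.0)] * 21
    pts[WRIST] = (x, 0.0, 0.0)
    pts[MIDDLE_MCP] = (x, 1.0, 0.0)
    pts[THUMB_TIP] = (x, 1.5, 0.0)
    pts[INDEX_TIP] = (x + gap, 1.5, 0.0)
    return pts

# Both hands pinch, then move apart: the model should double in size.
before = fake_hand(0.0, 0.1), fake_hand(1.0, 0.1)
after = fake_hand(-0.5, 0.1), fake_hand(1.5, 0.1)
if all(is_pinching(h) for h in before + after):
    print(round(two_hand_scale(*before, *after), 2))  # 2.0
```

Normalizing by hand scale keeps the pinch rule stable as the hand moves toward or away from the camera; a real system would also smooth landmarks across frames, which this sketch leaves out.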

The Power of Externalizing Thoughts

Sometimes, putting our thoughts into the outside world helps more than we might expect. For example, when we sketch something we know well or play with a physical model, we may discover a new way to interpret it. Or consider Scrabble: players tend to shuffle their tiles to prompt word recall instead of rearranging the letters purely in their heads.

In one study [5], researchers looked at how people understand ambiguous images (pictures that can be seen in two different ways, such as the Duck/Rabbit illusion or the Necker Cube). When people were asked to imagine these tricky pictures in their minds, they couldn't find the alternative interpretation and missed the ambiguity. But when they drew the pictures themselves, they could suddenly see both interpretations. This suggests that the pictures in our minds tend to stick with one interpretation, while drawings we make can help us see things in new ways.

AI-enabled Mindwares

An interesting question, then, is what kind of mindware we can make to externalize thoughts.

The computer is simply an instrument whose music is ideas. — Alan Kay

We are certainly not short of tools to do this (think sketches, diagrams, writing) - but can they be faster? More tightly coupled with our thinking processes? The way speech is coupled with internal dialogues?

To achieve this speed, we need automation. Speech-to-text only records thoughts that already exist and are already organized. But for the first time in history, we have a tool at our disposal that has somehow learned to detect not just word-by-word patterns but layers of interpretable meaning, in the form of embeddings and features. More interestingly, there are ways to reduce the mind-boggling number of dimensions to what we are used to: 2D or 3D. We can construct a thinking space from a space already enriched with our patterns of meaning, one capable of representing our thoughts in a way that makes sense to us. The space is fluid, ready to learn new things and to be molded as we think with it.
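To make the dimension reduction concrete, here is a minimal sketch (not the author's method) of one common technique, principal component analysis (PCA), computed with power iteration in plain Python and used to project a few idea vectors down to 2D. The four-dimensional vectors are made-up stand-ins for real embeddings; actual embeddings have hundreds or thousands of dimensions, and non-linear methods such as UMAP or t-SNE are common alternatives to PCA. All function names are hypothetical.

```python
import math
import random

def mean_center(vectors):
    """Subtract the per-dimension mean so the data is centered at the origin."""
    n, dim = len(vectors), len(vectors[0])
    means = [sum(v[i] for v in vectors) / n for i in range(dim)]
    return [[v[i] - means[i] for i in range(dim)] for v in vectors]

def covariance(centered):
    """Sample covariance matrix of centered data (dim x dim)."""
    n, dim = len(centered), len(centered[0])
    return [[sum(row[i] * row[j] for row in centered) / (n - 1) for j in range(dim)]
            for i in range(dim)]

def top_eigenvector(matrix, iterations=500, seed=0):
    """Power iteration: multiply a random vector by the matrix until it aligns
    with the dominant eigenvector; also return the matching eigenvalue."""
    rng = random.Random(seed)
    vec = [rng.uniform(-1.0, 1.0) for _ in matrix]
    for _ in range(iterations):
        nxt = [sum(m * x for m, x in zip(row, vec)) for row in matrix]
        norm = math.sqrt(sum(x * x for x in nxt)) or 1.0
        vec = [x / norm for x in nxt]
    mv = [sum(m * x for m, x in zip(row, vec)) for row in matrix]
    eigenvalue = sum(a * b for a, b in zip(vec, mv))
    return vec, eigenvalue

def project_to_2d(vectors):
    """Project vectors onto their first two principal components."""
    centered = mean_center(vectors)
    cov = covariance(centered)
    pc1, lam1 = top_eigenvector(cov)
    # Deflation: remove pc1's contribution so power iteration finds pc2 next.
    deflated = [[cov[i][j] - lam1 * pc1[i] * pc1[j] for j in range(len(pc1))]
                for i in range(len(pc1))]
    pc2, _ = top_eigenvector(deflated, seed=1)
    return [(sum(a * b for a, b in zip(v, pc1)), sum(a * b for a, b in zip(v, pc2)))
            for v in centered]

# Made-up 4-D "embeddings" standing in for real model output.
ideas = {
    "spider web": [0.90, 0.80, 0.10, 0.00],
    "net":        [0.85, 0.75, 0.15, 0.05],
    "notebook":   [0.10, 0.00, 0.90, 0.80],
    "memory":     [0.05, 0.10, 0.80, 0.90],
}
coords = dict(zip(ideas, project_to_2d(list(ideas.values()))))
for name, (x, y) in coords.items():
    print(f"{name:>10}: ({x:+.2f}, {y:+.2f})")
```

Because PCA is an orthogonal projection onto the directions of greatest variance, ideas that are close in the embedding space always stay close on the 2D canvas, and when most of the variation lies along a few directions, as in this toy data, distant ideas stay apart too.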

Imagine:

- A dynamic concept graph consisting of nodes, each representing an idea, and edges showing the hierarchical structure among them:
  - LLMs generate the hierarchical structure automatically, but we can edit it with gestures as we see fit.
  - Attractive and repulsive forces between nodes reflect how close the ideas they contain are.
  - Nodes can be merged, split, or grouped to generate new ideas.
- A data landscape we can navigate at various scales (micro and macro views):
  - Each data entry turns into a landform or structure whose physical properties (size, color, elevation, etc.) mirror its attributes.
  - Sort, group, and filter data entries to reshape the landscape and look for patterns.
- …
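The concept graph's attract-and-repulse behavior resembles a force-directed layout. Below is a minimal sketch under stated assumptions: each idea already has an embedding vector (the 3-D vectors are made up; a real system might get them from an LLM or embedding model), similarity is cosine similarity, similar ideas are pulled together by springs whose stiffness grows with similarity, and every pair pushes apart with an inverse-square force so nodes don't overlap. The constants and function names are hypothetical, and the LLM-generated hierarchy and gesture editing are not modeled.

```python
import math
import random

def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def layout(embeddings, steps=2000, attract=0.05, repel=0.02,
           step_size=0.1, max_move=0.1, seed=0):
    """Iteratively move 2-D node positions along the net pairwise force."""
    names = list(embeddings)
    rng = random.Random(seed)
    pos = {n: [rng.uniform(-1, 1), rng.uniform(-1, 1)] for n in names}
    for _ in range(steps):
        force = {n: [0.0, 0.0] for n in names}
        for i, a in enumerate(names):
            for b in names[i + 1:]:
                dx, dy = pos[b][0] - pos[a][0], pos[b][1] - pos[a][1]
                dist = math.hypot(dx, dy) or 1e-6
                sim = max(cosine(embeddings[a], embeddings[b]), 0.0)
                # Positive = pull a toward b: a spring scaled by similarity,
                # minus an inverse-square push that keeps nodes apart.
                magnitude = attract * sim * dist - repel / dist**2
                fx, fy = magnitude * dx / dist, magnitude * dy / dist
                force[a][0] += fx
                force[a][1] += fy
                force[b][0] -= fx
                force[b][1] -= fy
        for n in names:
            fx, fy = force[n]
            length = math.hypot(fx, fy)
            # Cap each move so a near-collision can't fling a node away.
            scale = step_size if length * step_size <= max_move else max_move / length
            pos[n][0] += scale * fx
            pos[n][1] += scale * fy
    return pos

# Made-up embeddings: two pairs of related ideas.
ideas = {
    "spider web": [1.0, 0.1, 0.0],
    "net":        [0.9, 0.2, 0.0],
    "notebook":   [0.0, 0.2, 1.0],
    "memory":     [0.1, 0.1, 0.9],
}
for name, (x, y) in layout(ideas).items():
    print(f"{name:>10}: ({x:+.2f}, {y:+.2f})")
```

For an isolated pair, the spring pull equals the push at a distance of (repel / (attract × similarity))^(1/3), so more similar ideas settle closer together. Merging, splitting, and grouping nodes would be further operations on this graph that a sketch this small leaves out.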
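Likewise, here is a minimal sketch of the data landscape's mapping, with hypothetical names throughout: each record becomes a landform whose elevation comes from a numeric value (min-max normalized), whose footprint radius grows with the square root of a count (so footprint area tracks the count), and whose color comes from its category. Filtering, sorting, and grouping reshape the layout: each category becomes its own row, ordered by a sort key. The record fields, palette, and spacing are all assumptions for illustration.

```python
import math
from dataclasses import dataclass

@dataclass
class Entry:            # hypothetical data record
    name: str
    category: str
    value: float        # drives elevation
    count: int          # drives footprint size

@dataclass
class Landform:
    name: str
    x: float
    y: float
    elevation: float    # 0..1, min-max normalized value
    radius: float       # sqrt(count), so footprint area tracks count
    color: str          # one hue per category

PALETTE = ["#4e79a7", "#f28e2b", "#59a14f", "#e15759"]  # arbitrary colors

def build_landscape(entries, keep=lambda e: True, sort_key=lambda e: e.value, spacing=2.0):
    kept = [e for e in entries if keep(e)]                      # filter
    if not kept:
        return []
    lo = min(e.value for e in kept)
    hi = max(e.value for e in kept)

    def elevation(e):
        return 0.5 if hi == lo else (e.value - lo) / (hi - lo)

    categories = sorted({e.category for e in kept})
    colors = {c: PALETTE[i % len(PALETTE)] for i, c in enumerate(categories)}
    landforms = []
    for row, cat in enumerate(categories):                      # group: one row per category
        members = sorted((e for e in kept if e.category == cat),
                         key=sort_key, reverse=True)            # sort: descending by sort_key
        for col, e in enumerate(members):
            landforms.append(Landform(e.name, col * spacing, row * spacing,
                                      elevation(e), math.sqrt(e.count), colors[cat]))
    return landforms

data = [
    Entry("sketch", "artifact", 8.0, 16),
    Entry("notebook", "artifact", 5.0, 9),
    Entry("speech", "modality", 9.0, 25),
    Entry("gesture", "modality", 2.0, 4),
]
for lf in build_landscape(data, keep=lambda e: e.value > 3):
    print(lf)
```

Changing `keep` or `sort_key` and rebuilding is this sketch's stand-in for reshaping the landscape to look for patterns.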

I will dive deeper and showcase some prototypes in part 2.

Next & Part 2: Prototyping A New Species of Interfaces

The fluid mind stretches far beyond our biological brain. From feeling vibrations in a spider's web to exploring data with gestures, we have always been relentlessly, uncontrollably integrating tools as extensions of our cognitive processes. Mindware, technology that operates at the speed of thought, further blurs the line between internal cognition and external representation. LLM-enabled interfaces, coupled with embodied, gestural controls, offer a glimpse of a future where thoughts become tangible entities.

Our journey into the realm of extended cognition is only starting. In part 2, I’ll showcase a series of experimental prototypes exploring how these ideas could inspire a new species of interfaces, one that materializes thoughts into tangible, explorable entities.

[1] David Kirsh, “Embodied Cognition and the Magical Future of Interaction Design”, 2013.

[2] A. Iriki et al., “Coding of Modified Body Schema During Tool Use by Macaque Postcentral Neurones”, 1996.

[3] Andy Clark & David J. Chalmers, “The Extended Mind”, 1998.

[4] David Kirsh, “When Is a Mind Extended?”, 2019.

[5] D. Reisberg, “External Representations and the Advantages of Externalizing One’s Thoughts”, 1987.
